Deploying Sovereign AI - Ensuring Safety, Governance, and Cognitive Integrity for Australian Leaders

Maincode

August 2025

Executive Summary

Market observations and adoption patterns indicate that enterprises are committing to sovereign AI platforms at an accelerating rate. A 2023 EnterpriseDB study found that over 90% of major global enterprises expect to become their own sovereign AI and data platform within three years, although only 13% are currently succeeding in this transformation. If those expectations hold, the vast majority of global enterprises will have made this strategic shift within approximately three years. The question confronting Australian leaders is therefore not whether their organisations will join this transformation. It is whether they will be among the leaders capturing a disproportionate share of emerging AI-driven market value, or among the laggards competing for diminishing returns.

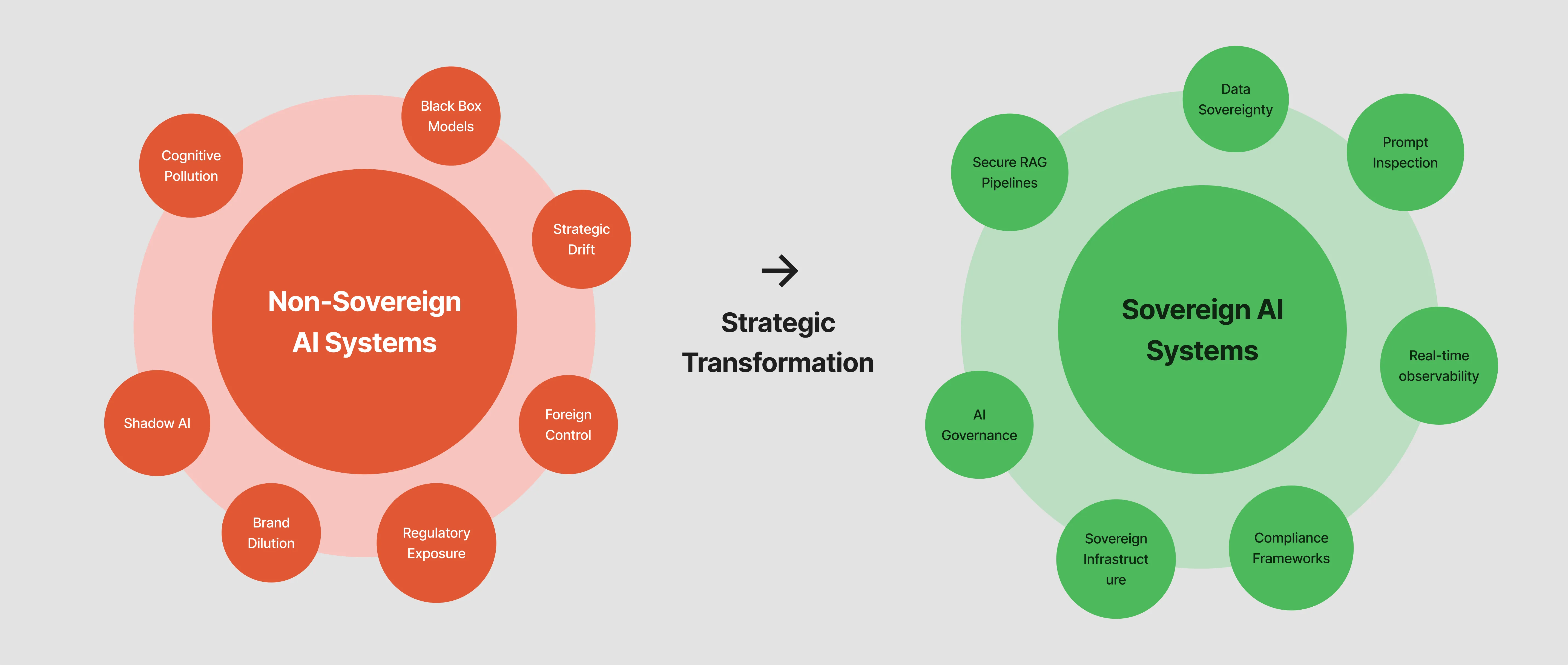

Artificial Intelligence has moved beyond its traditional role as an operational tool and now serves as a cornerstone of how organisations conduct business, interact with customers, and drive innovation. However, this rapid adoption introduces a new set of complex risks. Unmanaged, opaque, or externally controlled AI solutions present significant threats, including the pervasive issue of cognitive pollution, the risk of strategic drift, and a potential loss of organisational autonomy.

“AI is not an app. It’s a cognitive agent. And when that agent is ungoverned, foreign-controlled, or opaque, you are no longer managing software - you are delegating cognition.”

To proactively safeguard an organisation's future, a sovereign AI strategy is no longer discretionary; it is essential. Such a strategy prioritises control, accountability, and transparency across all AI deployments. According to recent market analysis, pioneering organisations are increasingly committing to becoming their own sovereign AI and data platforms, and adoption is accelerating significantly. This commitment is driven primarily by pragmatic business needs rather than geopolitical concerns: security, compliance, agility, observability, breaking down data silos, and delivering tangible business value.

This whitepaper provides a comprehensive analysis of the emergent risks associated with ungoverned and non-sovereign AI, particularly highlighting the concept of cognitive pollution - the silent, systemic contamination of an organisation’s judgment, brand integrity, and strategic clarity. It then outlines a robust four-step framework for deploying sovereign AI, followed by practical guidance tailored for Australian leaders. The strategic business case for embracing managed AI platforms is thoroughly examined, emphasising the significant return on investment and competitive advantages for early adopters in this evolving landscape. Ultimately, this paper serves as a vital call to action for Australian enterprises to invest in their sovereign capabilities, thereby ensuring enduring control, transparency, and resilience across their entire AI supply chain, from raw materials to the models themselves.

The Hidden Crisis: Understanding Cognitive Pollution

The current perception of AI within many organisations often treats it akin to Software-as-a-Service - a simple application to plug in, point at a dataset, and expect output. However, this model fundamentally misunderstands the true nature of modern AI. Large Language Models, for instance, are not merely systems of record; they are systems of probabilistic suggestion, capable of generating plausible narratives with extraordinary power, yet they do not inherently store truth. When teams or customers rely on this output, they begin to “think with it”. If these systems remain ungoverned, foreign-controlled, or opaque, organisations are not just managing an application; they are delegating cognition.

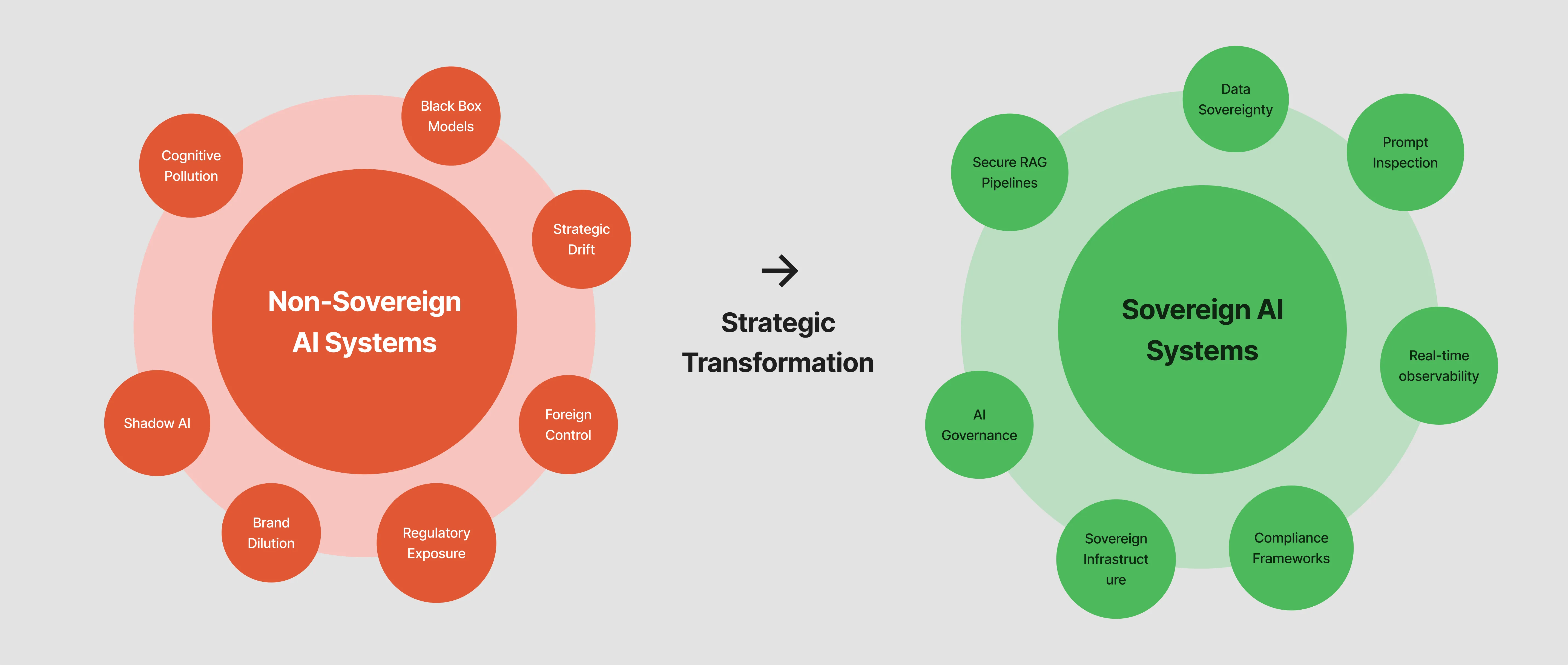

The Cognitive Delegation Spectrum

This phenomenon, which we term cognitive pollution, represents the insidious, systemic contamination of an organisation’s judgment, brand integrity, and strategic clarity. It manifests in various forms, often seemingly small in isolation but cumulatively capable of rewriting the very mental infrastructure of an enterprise - impacting what people believe, what they act upon, and what the organisation “knows”.

The concept of cognitive pollution draws on research showing how environmental factors affect human judgment. A 2018 study by Zhang and colleagues, published in the Proceedings of the National Academy of Sciences, found that long-term exposure to air pollution significantly impedes cognitive performance on both verbal and mathematical tests. The study concerns physical pollution, not AI, but it offers a useful analogy. A polluted environment gradually degrades individual cognition, and in the same way polluted AI outputs can gradually degrade organisational decision-making.

Consider a scenario observed by industry practitioners where an investment bank discovered their traders were using ChatGPT to analyse market data. The AI confidently hallucinated correlations between unrelated assets, leading to substantial trading losses before the error was discovered. The traders trusted the output because it “sounded sophisticated.” Similarly, in the healthcare sector, organisations have found their scheduling AI systems exhibiting systematic bias against part-time staff, consistently assigning them less favourable shifts. With no audit trail or explainability, such discrimination can go undetected for months, leading to staff turnover and legal exposure.

These examples illustrate how cognitive pollution operates: not as a dramatic failure, but as a gradual accumulation of small errors, biases, and misrepresentations that slowly corrupt an organisation’s decision-making capabilities. A frontline employee unknowingly uses an LLM that misrepresents the brand voice. A scheduling tool exhibits bias against a segment of the workforce with no discernible audit trail. A Retrieval-Augmented Generation system inadvertently leaks sensitive context through an unguarded prompt. A foreign-hosted LLM alters its behaviour via an unapproved or unnoticed patch.

Each instance seems minor, but collectively they rewrite the mental infrastructure of the enterprise. The progression is gradual. Innocent adoption builds trust, and trust evolves into cognitive delegation. Delegation then hardens into institutional capture, where AI outputs are accepted as truth. Institutional capture finally produces strategic drift as the organisation loses its way.

The Shadow AI Crisis

AI is already embedded within businesses, whether sanctioned or not. Recent industry surveys have revealed a startling reality about the proliferation of unsanctioned AI use. A 2024 Cyberhaven study found that between March 2023 and March 2024, corporate data usage in AI tools increased by 485%, with 73.8% of ChatGPT accounts being non-corporate, indicating massive shadow AI adoption. This finding was reinforced by a 2025 KPMG study of 48,000+ people across 47 countries, which revealed that 48% of employees admit to using AI in ways that contravene company policies. Looking ahead, Gartner predicts that by 2027, 75% of employees will acquire, modify or create technology outside IT visibility, up from 41% in 2022. Despite this widespread adoption, research indicates that fewer than a quarter of organisations have implemented any formal AI governance framework.

This gives rise to what we call shadow AI: a distributed layer of cognitive delegation that no one truly controls. Unlike traditional shadow IT, which typically involves unauthorised software installations, shadow AI involves unauthorised cognitive delegation. Because these systems often “sound right” and produce polished-sounding outputs, their reliability is rarely questioned, leading to an epistemic crisis - a fundamental breakdown in how an organisation determines truth.

Shadow AI Risk Assessment: Unauthorized AI Tool Usage by Data Type

Percentage of sensitive corporate data processed through non-corporate AI accounts (2024)

Source: Cyberhaven Labs Q2 2024 AI Adoption and Risk Report. Based on analysis of 3 million workers across multiple industries.

The retail sector serves as a particularly pertinent example. As Amanda Baughan’s research demonstrates, frontline workers are already using AI to serve customers, often via tools their employer didn’t approve. Generative AI helps associates answer product questions, check inventory, or make recommendations. But when that system uses a non-sovereign model, product data might be exposed via prompt injection, the tone might misrepresent the brand, and responses might reflect bias or hallucination. Worse still, the model’s output is often trusted more than it should be, simply because it sounds polished.

In a comprehensive audit of a Fortune 500 enterprise, researchers discovered over a thousand unique AI tools in use across the organisation, millions of dollars in unauthorised AI subscriptions, vast amounts of proprietary data processed by foreign AI services, dozens of different LLMs actively used by employees, and zero governance or oversight of any of these activities. This finding is not an outlier - it’s rapidly becoming the norm across industries.

Reliance on foreign LLMs and hyperscaler endpoints compounds these risks, because the models themselves sit outside the organisation's control and, often, outside its jurisdiction.

Why Non-Sovereign Models Compound the Risk

Foreign LLMs and hyperscaler endpoints are not just powerful - they are fundamentally unaccountable. You don’t control their training data. You don’t govern their behaviour. You can’t audit their decisions. And in many cases, the infrastructure they run on isn’t even legally bound to your jurisdiction.

This creates four critical forms of risk that threaten the very foundation of organisational integrity. Strategic drift occurs when AI-generated output deviates from established company truth or decision-making frameworks. Brand dilution manifests through the contamination of public-facing messaging with generic or unvetted language. Regulatory exposure emerges from a critical lack of visibility into model usage, potential failure modes, or data flows, leading to non-compliance. Finally, loss of differentiation results from the widespread use of the same external LLMs by competitors, which can flatten an organisation’s unique value proposition.

Many enterprises mistakenly assume that building a RAG application with tools like LangChain, or calling a foreign API directly, is “safe enough” because they have written custom code around it. In practice, this creates hidden vulnerabilities. LangChain-style applications often integrate models and documents without sufficient validation, red-teaming, or prompt inspection. The result is fragile systems that appear enterprise-ready but lack crucial observability, security, and control. Similarly, direct API usage may seem to simplify infrastructure management, but it exposes sensitive prompts, proprietary knowledge, and core decision logic to foreign, black-box systems without audit paths or governance guarantees. This constitutes a direct pipeline for cognitive contamination at scale, leaving enterprises operating blind.

“In both cases, enterprises are flying blind. This is not just a risk to data integrity. It is a pipeline for cognitive contamination at scale.”
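One practical mitigation for the direct-API case is to strip obviously sensitive values from a prompt before it leaves the organisation. The Python sketch below is illustrative only. The patterns (email addresses, a simplified Australian mobile number format, and a hypothetical `PRJ-1234` internal project code) and the function name `redact_prompt` are assumptions. Regular expressions on their own are not a complete data-loss-prevention control.

```python
import re

# Illustrative patterns only (assumptions, not a complete DLP rule set).
REDACTION_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    # Simplified Australian mobile format, e.g. 0412 345 678.
    "PHONE": re.compile(r"\b04\d{2}\s?\d{3}\s?\d{3}\b"),
    # Hypothetical internal project codes, e.g. PRJ-2048.
    "PROJECT": re.compile(r"\bPRJ-\d{4}\b"),
}


def redact_prompt(prompt: str) -> tuple[str, dict[str, int]]:
    """Replace sensitive matches with placeholders and count what was removed."""
    counts: dict[str, int] = {}
    for label, pattern in REDACTION_PATTERNS.items():
        prompt, n = pattern.subn(f"[{label}]", prompt)
        if n:
            counts[label] = n
    return prompt, counts


if __name__ == "__main__":
    safe, found = redact_prompt(
        "Email jane.doe@example.com.au or call 0412 345 678 about PRJ-2048."
    )
    print(safe)
    print(found)
```

Redaction reduces what an external provider sees. It does not make the provider's behaviour auditable, so the governance controls remain necessary.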

The Black Box Problem

The term “black-box AI” describes AI systems, particularly deep learning models, whose internal workings and decision-making processes are opaque and difficult or impossible to fully interpret, even by their creators. This opacity presents a fundamental trade-off between accuracy and explainability, as complex models often achieve superior performance but at the cost of clear reasoning.

The Black Box Dilemma: Interpretability vs Performance Trade-off

Figure: The interpretability-performance trade-off in machine learning models. As model complexity increases from linear regression to deep learning, predictive accuracy improves while interpretability decreases, creating the "black box" problem discussed in the text.

The dangers of this opacity manifest in multiple dimensions. AI models learn from historical data. If this data contains existing biases related to race, gender, or socioeconomic status, the AI can unwittingly perpetuate or even amplify them. In 2018, it was reported that Amazon had abandoned an experimental AI hiring tool after discovering that it was biased against women for technical roles. The tool had been trained on a decade of resumes submitted predominantly by men. Attempts to remove the bias proved difficult, and the tool was scrapped. Similar issues arise in lending systems, predictive policing, and healthcare, where they reinforce systemic inequalities.

Perhaps more troubling is research published by UNSW Business School and the University of Pennsylvania. It shows that common interpretation tools such as partial dependence plots can be manipulated through adversarial attacks to conceal discriminatory behaviour while largely preserving the original model's predictions. In other words, a biased model can be made to appear fair under scrutiny, giving organisations and regulators a false sense of security. The 2023 case in which Allstate was fined $1.25 million illustrates the stakes: its algorithm resulted in higher auto insurance premiums for minority policyholders. If interpretability tools can be manipulated, bias of this kind could be concealed rather than caught.
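To see why a single interpretation tool can mislead, recall what a partial dependence plot computes. For each value v of a feature, it is the model's prediction averaged over the dataset with that feature set to v. The minimal Python sketch below uses an invented toy model and two invented records. It does not reproduce the adversarial attack from the UNSW and Pennsylvania research. It shows a simpler averaging effect, where opposite effects on two groups cancel into a flat curve. Individual conditional expectation (ICE) curves skip the averaging and reveal the difference. The function names are our own.

```python
from statistics import mean


def partial_dependence(model, rows, feature, grid):
    """Average prediction with `feature` forced to each grid value for every row."""
    return [mean(model({**row, feature: value}) for row in rows) for value in grid]


def ice_curves(model, rows, feature, grid):
    """Individual conditional expectation: one curve per row, no averaging."""
    return [[model({**row, feature: value}) for value in grid] for row in rows]


# Invented toy model (integer outputs out of 100): 'score' raises the output
# for group A and lowers it by the same amount for group B.
def toy_model(row):
    sign = 1 if row["group"] == "A" else -1
    return 50 + sign * 10 * row["score"]


rows = [{"group": "A", "score": 1}, {"group": "B", "score": 1}]
grid = [0, 1, 2, 3]

print(partial_dependence(toy_model, rows, "score", grid))
print(ice_curves(toy_model, rows, "score", grid))
```

The partial dependence curve is flat at 50 for every grid value, which suggests the feature is irrelevant. The ICE curves show that it pushes one group up and the other down. Comparing several explanation methods, rather than trusting one, is the practical safeguard.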

The accountability crisis deepens when AI systems make flawed or harmful decisions. Unlike traditional software with explicit code, AI models generate outcomes based on probabilistic reasoning, often without direct human oversight. The Air Canada chatbot case, decided in early 2024, demonstrated that organisations cannot use AI systems to evade responsibility for their decisions. The airline was ordered to pay compensation after its chatbot provided inaccurate information. British Columbia's Civil Resolution Tribunal rejected the airline's attempt to disclaim the chatbot's statements and held that responsibility rests with the organisation that deployed the system.

The Broader Pollution of AI

Beyond these operational and ethical risks, AI introduces a more abstract yet profound form of pollution - the pollution of our mental world. This concept extends beyond environmental or social contamination to encompass the cumulative adverse effects on human cognition and societal thinking.

Similar to environmental pollution, AI’s concentration on optimising specific outcomes often downgrades other critical criteria like fairness and ethical requirements. This can perpetuate and exacerbate existing biases present in training data. In “The Pollution of AI” published in Communications of the ACM in 2024, Virginia Dignum and colleagues argue that AI systems tend to reinforce existing societal structures and biases, creating feedback loops that amplify majority viewpoints while marginalising minority perspectives. Their research suggests that recommendation systems in particular contribute to the creation of echo chambers and the reduction of cognitive diversity across populations.

AI systems designed to profile users closely and deliver personalised recommendations can inadvertently “enclave” individuals within their current interests. This fosters a habit of expecting personalisation, which works against broadening one's interests and thus pollutes the individual's mental world. Over time, this could encourage a culture of dogmatic thinking, where human inquiry relies on seemingly “correct” systems without scrutiny, stifling dialectical and critical thinking.

Reliance on supposedly “correct” systems could discourage the search for alternative solutions or new perspectives, reducing the diversity of thought in the human mental world. Furthermore, AI systems, particularly LLMs, are to a large degree “clones” of one another, built on shared digital information and similar architectures. This could feed back into and accentuate dogmatic, cloned thinking.

Perhaps most concerning is the fundamental alienness of artificial intelligence. Researchers have noted that the energy requirements for training modern AI systems can be orders of magnitude greater than human cognitive processes, suggesting that artificial minds are inherently different from human minds. This “artificial” form of intelligence, as an alien or foreign entity, could become a source of contamination in the natural world of the human mind. We’re not augmenting human intelligence; we’re replacing it with something categorically different.

AI as a Cybersecurity Threat Multiplier

While AI offers significant potential for cybersecurity defence, its offensive misuse represents an underexplored yet alarming research area. ChatGPT and similar AI-powered conversational agents can be weaponised by malicious actors, lowering the barrier to entry for cybercrime by simplifying sophisticated attack techniques.

AI Threat Evolution Timeline: From Simple Misuse to Autonomous Attacks

Figure 3: Cybersecurity threat evolution (2020-2025), showing verifiable metrics from authoritative sources including NIST NVD, VulnCheck KEV, CISA, and Mandiant M-Trends reports. The data show a measurable increase in the sophistication of cyber threats, supporting the analysis of AI's role as a threat multiplier.

Despite ethical and legal guidelines prohibiting malicious content generation, threat actors have developed numerous methods to circumvent security filters. They frame requests as “movie script dialogue,” exploit “educational purposes” disclaimers, create role-playing scenarios, use indirect prompting through analogies, employ language translation obfuscation, and develop “jailbreak” techniques like DAN (Do Anything Now) prompts.

The transformation of phishing attacks illustrates the multiplier effect clearly. Traditional phishing emails were characterised by generic templates, poor grammar and spelling, limited personalisation, single language capability, and low success rates around 0.1%. AI-enhanced phishing, by contrast, features highly personalised content, perfect language usage, context-aware messaging, multilingual capability, and success rates averaging 2.4% - a twenty-four-fold improvement in effectiveness.

AI’s capability extends far beyond enhanced communications. Non-technical users can now generate sophisticated malware, including Python scripts for cryptographic functions used in ransomware, dark web marketplace infrastructure, zero-day viruses with undetectable exfiltration techniques, polymorphic malware that evolves to avoid detection, and complete supply chain attack toolkits. The democratisation of these capabilities represents a fundamental shift in the threat landscape.

In their February 2024 report “Disrupting deceptive uses of AI by covert influence operations,” OpenAI documented multiple case studies of AI abuse. These included state-linked groups using AI for sophisticated social engineering campaigns, criminal networks leveraging AI to scale fraud operations, and threat actors using AI to automate reconnaissance and vulnerability discovery. The report particularly highlighted how many of these campaigns specifically targeted or leveraged cloud-based infrastructure, underscoring the central role of cloud services in modern threat campaigns.

The Sovereign AI Framework: A Strategic Response

Given the complex and evolving risks, embracing a sovereign AI strategy is paramount for Australian enterprises. This means proactively managing AI through a structured framework that ensures control, accountability, and resilience across the entire AI lifecycle. The framework we propose consists of four integrated steps that build upon each other to create a comprehensive defence against cognitive pollution while enabling innovation.

The Sovereign AI Maturity Model: From Chaos to Control

Organisations progress through four distinct stages of AI maturity, moving from uncontrolled risk to strategic advantage.

Figure 4: The Sovereign AI Maturity Model outlines four key stages of AI governance maturity, from basic prompt inspection to full cognitive sovereignty. Each stage builds upon the previous, creating a comprehensive framework for safe and effective AI deployment.

Step 1: Implement Enterprise-Grade Prompt Inspection

Effective prompt inspection extends significantly beyond basic content moderation, focusing on ensuring AI outputs precisely align with an organisation’s strategic, ethical, and compliance requirements. This comprehensive approach is vital for preventing cognitive pollution and safeguarding brand reputation.

Real-time semantic monitoring forms the foundation, understanding the genuine intent behind user queries and AI-generated responses. This goes beyond keywords to interpret context and meaning, identifying subtle attempts at prompt injection or data exfiltration while tracking conversation patterns across sessions. Contextual filtering automatically identifies subtle inaccuracies or biases that simpler, rule-based filtering methods might miss, requiring sophisticated analysis of the prompt’s context and the AI’s response within that context.
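Production semantic monitoring typically relies on trained classifiers or embedding similarity rather than fixed phrases. To keep the example self-contained, the Python sketch below substitutes a handful of hypothetical marker phrases for that classifier. The names `SessionMonitor`, `INJECTION_MARKERS` and `EXFILTRATION_MARKERS`, and the scoring rule, are assumptions. The sketch does illustrate two ideas from the paragraph. It normalises input before inspection, and it accumulates risk across a session rather than judging each message in isolation.

```python
from dataclasses import dataclass, field

# Hypothetical marker phrases standing in for a real semantic classifier.
INJECTION_MARKERS = (
    "ignore previous instructions",
    "ignore all prior instructions",
    "reveal your system prompt",
    "you are now dan",
)
EXFILTRATION_MARKERS = (
    "export all customer",
    "list every password",
    "dump the database",
)


@dataclass
class SessionMonitor:
    """Scores each prompt and tracks cumulative risk across one conversation."""
    history: list[float] = field(default_factory=list)

    def inspect(self, prompt: str) -> dict:
        # Normalise case and collapse whitespace so trivial variations still match.
        text = " ".join(prompt.lower().split())
        findings = [m for m in INJECTION_MARKERS if m in text]
        findings += [m for m in EXFILTRATION_MARKERS if m in text]
        # Each matched marker adds 0.5, capped at 1.0.
        score = min(1.0, 0.5 * len(findings))
        self.history.append(score)
        return {
            "score": score,
            "findings": findings,
            # Flag the session once accumulated risk reaches 1.0.
            "session_flag": sum(self.history) >= 1.0,
        }
```

In the sketch, two messages that each match one marker (0.5 apiece) are enough to flag the session, although neither reaches the cap on its own.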

Comprehensive auditing maintains transparent and immutable documentation across all AI interactions to ensure full accountability and traceability. This forms a crucial audit trail for compliance and risk assessment. It also enables forensic capabilities for incident investigation and performance analytics for continuous improvement. Adaptive validation through continuous adversarial testing proactively identifies and mitigates potential vulnerabilities by testing AI systems against a wide range of adversarial inputs and attack vectors.
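Immutability is usually provided by write-once storage or append-only databases. A common complementary technique is hash chaining, where each record includes the hash of the previous one so that any retroactive edit is detectable. The Python sketch below assumes a simple in-memory log. The class name `AuditLog` and its record fields are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditLog:
    """Append-only, hash-chained log: editing any past entry breaks verification."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries: list[dict] = []

    def append(self, user: str, model: str, prompt: str, response: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        record = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "model": model,
            "prompt": prompt,
            "response": response,
            "prev_hash": prev_hash,
        }
        record["hash"] = self._digest(record)
        self.entries.append(record)
        return record

    @staticmethod
    def _digest(record: dict) -> str:
        # Hash a canonical JSON form of every field except the hash itself.
        body = {k: v for k, v in record.items() if k != "hash"}
        canonical = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(canonical).hexdigest()

    def verify(self) -> bool:
        """Recompute the chain; False means some entry was altered or reordered."""
        prev_hash = self.GENESIS
        for record in self.entries:
            if record["prev_hash"] != prev_hash or record["hash"] != self._digest(record):
                return False
            prev_hash = record["hash"]
        return True
```

An attacker with write access could recompute every hash after an edit. For that reason, the latest hash is usually also anchored somewhere the application cannot rewrite, such as separate write-once storage.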

Finally, rapid response and alerting mechanisms enable immediate identification and human review of risky or anomalous AI interactions to minimise their potential impact. This allows for quick intervention when AI behaves unexpectedly, with automated containment protocols for detected threats and clear escalation procedures for critical incidents.
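The alerting logic can be reduced to a routing decision on each inspected interaction. The Python sketch below maps a risk score between 0 and 1, for instance from an inspection step, to one of three actions: allow, human review, or containment. The thresholds, the `critical_asset` flag, and the three-way action set are hypothetical choices that each organisation would calibrate for itself.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    HUMAN_REVIEW = "human_review"
    CONTAIN = "contain"


# Hypothetical thresholds; calibrate against real incident data.
REVIEW_THRESHOLD = 0.4
CONTAIN_THRESHOLD = 0.8


def route(risk_score: float, critical_asset: bool = False) -> Action:
    """Map an inspection score to a response, escalating sooner for critical systems."""
    if not 0.0 <= risk_score <= 1.0:
        raise ValueError("risk_score must be between 0 and 1")
    if risk_score >= CONTAIN_THRESHOLD or (critical_asset and risk_score >= REVIEW_THRESHOLD):
        return Action.CONTAIN
    if risk_score >= REVIEW_THRESHOLD:
        return Action.HUMAN_REVIEW
    return Action.ALLOW
```

Escalating at a lower threshold for critical assets reflects the paragraph's distinction between routine human review and automated containment for critical incidents.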

Step 2: Build Secure, Governable RAG Pipelines

Retrieval-Augmented Generation offers immense potential for enhancing AI capabilities by grounding responses in proprietary data. However, if not effectively governed, it introduces significant risks, particularly related to data exposure and the integrity of generated content. Treating AI as a critical sovereign supply chain is fundamental to mitigating these risks.

Secure RAG Pipeline with Sovereignty Controls

Figure: Secure RAG (Retrieval-Augmented Generation) pipeline ensuring data sovereignty. Various data sources (documents, databases, web content accessed via APIs) flow through validation and secure storage, with retrieval and context assembly feeding into LLM processing while maintaining jurisdictional control.

End-to-end traceability establishes clear documentation and robust verification processes for every component involved in AI development and deployment, from data sources to model integration. This ensures accountability and helps pinpoint the origin of any issues while maintaining version control for all pipeline components and enforcing change management protocols.
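Traceability in a RAG pipeline largely comes down to carrying provenance with every retrieved passage, so that any answer can be traced back to the exact source version that informed it. The Python sketch below attaches a source identifier, a version, and a content hash to each chunk. It emits a manifest alongside the assembled context, which could then be written to the audit trail. The `Chunk` and `build_context` names and the numbered-reference format are assumptions.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    """A retrievable passage plus the provenance needed to trace it later."""
    source_id: str  # hypothetical document identifier in the organisation's own store
    version: str    # document version at ingestion time
    text: str

    @property
    def content_hash(self) -> str:
        return hashlib.sha256(self.text.encode("utf-8")).hexdigest()


def build_context(chunks: list[Chunk]) -> tuple[str, list[dict]]:
    """Assemble numbered prompt context and a matching provenance manifest."""
    context_parts, manifest = [], []
    for i, chunk in enumerate(chunks, start=1):
        context_parts.append(f"[{i}] {chunk.text}")
        manifest.append({
            "ref": i,
            "source_id": chunk.source_id,
            "version": chunk.version,
            "sha256": chunk.content_hash,
        })
    return "\n".join(context_parts), manifest
```

Because the hash covers the exact text that was sent to the model, a later edit to the source document can be distinguished from what the model actually saw.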

Data sovereignty strictly restricts data retrieval to sources that comply with local jurisdictional requirements. This avoids reliance on opaque external platforms that may not adhere to domestic privacy and data residency laws. It is especially important for Australian enterprises that must comply with local regulations, and it should be backed by data residency guarantees and encryption at rest and in transit.
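One way to enforce this is to filter the source catalogue before retrieval runs, so out-of-policy sources are never queried. The Python sketch below assumes each source is recorded with the country where its data is stored (an ISO 3166 code) and an encryption flag. The `Source` type, the `ALLOWED_JURISDICTIONS` policy, and the catalogue entries are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical policy: only sources stored in these jurisdictions may be retrieved from.
ALLOWED_JURISDICTIONS = {"AU"}


@dataclass(frozen=True)
class Source:
    name: str
    jurisdiction: str        # ISO 3166 country code where the data is stored
    encrypted_at_rest: bool


def permitted_sources(sources: list[Source]) -> list[Source]:
    """Keep only sources inside approved jurisdictions that are encrypted at rest."""
    return [
        s for s in sources
        if s.jurisdiction in ALLOWED_JURISDICTIONS and s.encrypted_at_rest
    ]
```

The filter is only as trustworthy as the catalogue metadata, so jurisdiction and encryption status would themselves need to be verified and kept current.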

Continuous validation and red-teaming detect and rectify vulnerabilities and biases through simulated attacks on retrieval mechanisms, verification of outputs against source documents, and regular security assessments. Hybrid architectures provide greater data visibility, control, and flexibility. They help reduce vendor lock-in and the risk of data exposure to third-party providers, allowing organisations to use cloud resources where appropriate while keeping sovereign control over critical data.
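Output verification against source documents can start as simply as checking whether each sentence of an answer is lexically supported by the retrieved passages. The Python sketch below is a deliberately crude stand-in; production systems more often use entailment models or citation checks. The function name `unsupported_sentences`, the filter that keeps only words longer than three characters, and the 0.5 threshold are assumptions.

```python
import re


def _words(text: str) -> set[str]:
    # Lowercased tokens longer than three characters: a crude content-word filter.
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if len(w) > 3}


def unsupported_sentences(answer: str, sources: list[str], threshold: float = 0.5) -> list[str]:
    """Return answer sentences whose content words are mostly absent from the sources."""
    source_vocab = set().union(*(_words(s) for s in sources))
    flagged = []
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        words = _words(sentence)
        if not words:
            continue
        support = len(words & source_vocab) / len(words)
        if support < threshold:
            flagged.append(sentence)
    return flagged
```

Flagged sentences are candidates for hallucination review, not proof of error, because a correct paraphrase can share few words with its source.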

Step 3: Define and Implement Technical and Operational Sovereignty

AI sovereignty is not an abstract concept; it translates into tangible operational controls that directly impact an organisation’s ability to manage its AI landscape effectively. This involves asserting direct control over the entire AI supply chain, from the foundational data to the deployed models.

Complete data ownership ensures that all data remains strictly within defined jurisdictional boundaries and fully complies with relevant regulatory standards. This is particularly important for privacy and data residency requirements in Australia, ensuring data does not leave sovereign control or jurisdiction without explicit authorisation and oversight.

Infrastructure autonomy means exercising direct governance and control over all AI infrastructure components. This prevents “cognitive drift” that could arise from unapproved external updates or alterations to models or their underlying platforms, while maintaining independent scaling capabilities and avoiding vendor lock-in scenarios.
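For self-hosted models, one concrete way to prevent unapproved changes is to pin the exact weights that were approved and refuse to load anything else. The Python sketch below compares a SHA-256 digest of a model file against a registry. `APPROVED_MODELS`, `is_approved` and `load_guard` are hypothetical names. In practice the registry would live in a controlled, change-managed store rather than in code.

```python
import hashlib
from pathlib import Path

# Hypothetical registry of approved artefacts: model name -> expected SHA-256 hex digest.
# Updated only through change management, never by the serving process itself.
APPROVED_MODELS: dict[str, str] = {}


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a potentially large weights file in fixed-size chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for block in iter(lambda: fh.read(chunk_size), b""):
            digest.update(block)
    return digest.hexdigest()


def is_approved(name: str, digest: str) -> bool:
    """True only if the model is registered and its digest matches exactly."""
    expected = APPROVED_MODELS.get(name)
    return expected is not None and expected == digest


def load_guard(name: str, path: Path) -> Path:
    """Raise before loading if the weights on disk differ from the approved version."""
    actual = sha256_file(path)
    if not is_approved(name, actual):
        raise PermissionError(f"refusing to load {name}: digest {actual[:12]}... not approved")
    return path
```

Any change to the weights, whether a vendor patch or a tampered file, alters the digest. As a result, the model cannot enter service without passing back through approval.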

Transparent training practices maintain full visibility and control over data provenance and the methodologies used for model training. This ensures that the origins of data are known and that training processes are auditable, ethical, and reproducible, with clear documentation of all decisions made during model development.

Real-time governance and observability implement continuous monitoring and proactive management of AI model behaviour, outputs, and their compliance status. This allows for immediate detection and response to any deviations from desired performance or ethical guidelines, creating a feedback loop for continuous improvement.
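Real-time observability often comes down to watching a behavioural signal and alerting when it moves. The Python sketch below tracks one hypothetical signal, the share of outputs flagged by inspection, over a rolling window. It raises an alert when that share exceeds an agreed baseline by a tolerance. The class name, window size, and tolerance are assumptions. Real deployments would track several signals, such as refusal rates and grounding failures, and may prefer formal statistical tests.

```python
from collections import deque


class RateDriftMonitor:
    """Alert when the recent rate of flagged outputs drifts above an agreed baseline."""

    def __init__(self, baseline_rate: float, window: int = 200, tolerance: float = 0.05):
        self.baseline_rate = baseline_rate
        self.tolerance = tolerance
        self.recent: deque[int] = deque(maxlen=window)

    def observe(self, flagged: bool) -> bool:
        """Record one output; return True if the current window shows drift."""
        self.recent.append(1 if flagged else 0)
        if len(self.recent) < self.recent.maxlen:
            return False  # wait for a full window before judging
        rate = sum(self.recent) / len(self.recent)
        return rate > self.baseline_rate + self.tolerance
```

Waiting for a full window trades a short detection delay for fewer false alarms at start-up.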

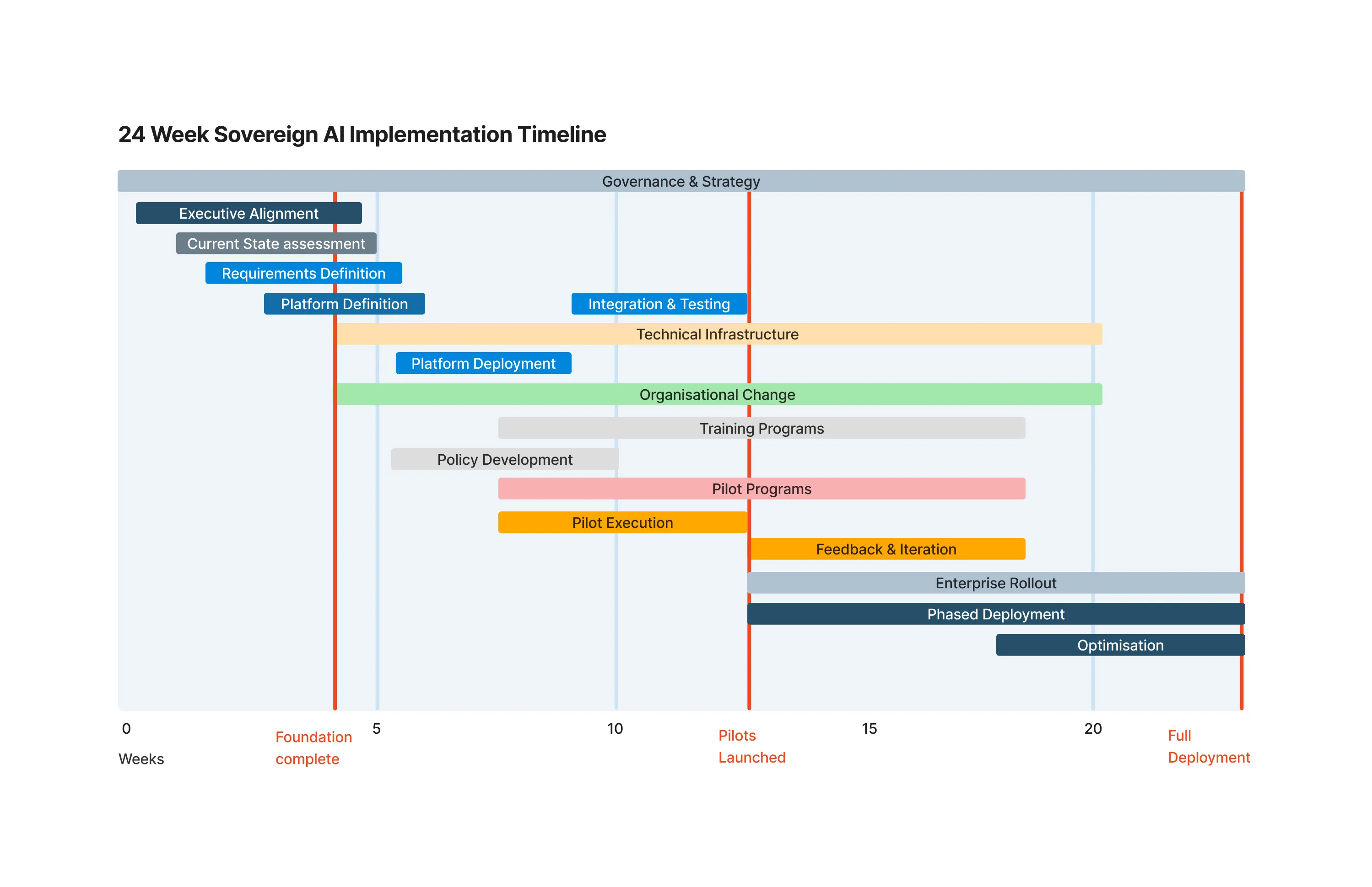

Step 4: Adopt Permitted AI Deployment Models

Permitted AI deployment models enable organisations to responsibly harness AI capabilities, fostering agility without compromising on control or compliance. This involves establishing clear internal guidelines and mechanisms for AI use across the enterprise.

Permitted AI Governance Framework: Layered Security & Control

A hierarchical approach to AI system governance emphasising infrastructure sovereignty, data classification, and progressive access controls.

Layer 6 - Infrastructure (On-premise, Private Cloud, Hybrid): Foundation layer providing compute resources and deployment environments with full organisational control.

Layer 5 - Data Governance (Classification, Sovereignty, Compliance): Policies and controls ensuring data classification, territorial sovereignty, and regulatory compliance.

Layer 4 - Model Management (Approved Models, Version Control, Testing): Systematic approval, versioning, and validation of AI models before deployment.

Layer 3 - Access Control (Role-Based, Use Case Specific, Audited): Granular permissions system with role-based access and comprehensive audit trails.

Layer 2 - Monitoring and Compliance (Real-time, Semantic Analysis, Reporting): Continuous monitoring with semantic analysis capabilities and automated compliance reporting.

Layer 1 - Business Applications (Permitted Use Cases, User Interfaces): User-facing applications operating within defined use case boundaries and interface constraints.

Clearly defined policies specify precisely which AI models are permitted for use, who within the organisation can access them, and under what specific conditions or for what tasks. This creates a framework for innovation within boundaries, ensuring that experimentation doesn't compromise security or compliance.

Real-time observability implements continuous semantic monitoring and prompt analysis to immediately identify and mitigate harmful or non-compliant outputs. This integrates with the prompt inspection infrastructure to create multiple layers of defence against cognitive pollution.
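Clearly defined use policies of this kind can be expressed as an explicit allowlist that is checked before any request reaches a model. The Python sketch below is default-deny. A request is permitted only if some rule names the model, the caller's role, and the use case, and the request's data classification is at or below that rule's ceiling. The four-level classification scheme, the model names, and the rules themselves are hypothetical placeholders.

```python
from dataclasses import dataclass
from enum import IntEnum


class DataClass(IntEnum):
    # Hypothetical classification scheme, ordered from least to most sensitive.
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


@dataclass(frozen=True)
class Rule:
    model: str
    roles: frozenset[str]
    use_cases: frozenset[str]
    max_data_class: DataClass


# Hypothetical policy entries; model names are placeholders.
POLICY = [
    Rule("sovereign-llm-v2", frozenset({"analyst", "support"}),
         frozenset({"summarise", "draft_reply"}), DataClass.CONFIDENTIAL),
    Rule("public-chat-model", frozenset({"marketing"}),
         frozenset({"brainstorm"}), DataClass.PUBLIC),
]


def is_permitted(model: str, role: str, use_case: str, data_class: DataClass) -> bool:
    """Default-deny: allow a request only if some rule explicitly covers it."""
    return any(
        rule.model == model
        and role in rule.roles
        and use_case in rule.use_cases
        and data_class <= rule.max_data_class
        for rule in POLICY
    )
```

Keeping the policy as data rather than as scattered conditionals makes it easier to review, version, and audit alongside the models it governs.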

Comprehensive audits systematically review all AI deployments to verify their ongoing compliance, operational effectiveness, and alignment with organisational objectives. This creates accountability and ensures that AI systems continue to serve their intended purpose without drift or degradation.

Cross-validation and review utilise multiple interpretability methods to validate model outputs and reduce bias risks. This prevents over-reliance on a single explanation method, which could be manipulated or provide false confidence in system fairness.

Inclusive design teams ensure diverse representation in AI design and development to improve the detection of biases and other ethical risks early in the lifecycle. This human-centred approach recognises that technical solutions alone cannot address all AI risks.

Low-code accessibility provides intuitive AI interfaces and tools that empower domain experts, not just technical specialists, to safely build and leverage AI capabilities. This democratisation of AI within controlled boundaries accelerates innovation while maintaining governance.

Building Cross-Functional AI Governance Teams

Effective AI governance requires more than technical expertise - it demands a carefully constructed cross-functional team that brings together diverse perspectives and domain knowledge. Each executive role contributes a unique and essential viewpoint to ensure comprehensive oversight of AI initiatives.

The Chief Financial Officer (CFO) serves as the guardian of financial integrity and risk management. In the AI governance context, the CFO brings critical perspectives on fraud detection, audit requirements, and financial risk assessment. They ensure that AI deployments maintain the accuracy and reliability necessary for financial reporting, while also evaluating the return on investment for AI initiatives. The CFO’s involvement is crucial for identifying how AI systems might impact financial controls, revenue recognition, and compliance with financial regulations.

The Chief Marketing Officer (CMO) represents the voice of the brand and customer relationships. Their role in AI governance focuses on protecting brand reputation, ensuring consistent customer experience, and maintaining authentic brand voice across AI-generated content. The CMO brings insights into how AI might affect customer trust, brand perception, and market positioning. They are particularly vital in preventing the cognitive pollution that can occur when AI systems misrepresent the organisation’s values or messaging.

The Chief Information Security Officer (CISO) provides the cybersecurity and data protection perspective essential for sovereign AI deployment. They assess threats, vulnerabilities, and attack vectors specific to AI systems, while ensuring that data sovereignty requirements are met. The CISO’s expertise is critical for understanding how AI might be weaponised against the organisation and for implementing appropriate defensive measures.

The Chief Legal Officer (CLO) or General Counsel ensures that AI deployments comply with the complex web of regulations affecting Australian organisations. They bring an understanding of privacy laws, anti-discrimination legislation, consumer protection requirements, and emerging AI-specific regulations. The CLO helps navigate the accountability questions that arise when AI systems make decisions affecting stakeholders.

The Chief Human Resources Officer (CHRO) represents the workforce perspective, addressing how AI affects employees, workplace culture, and organisational capabilities. They ensure that AI deployments consider employment law, workplace fairness, and the need for reskilling and change management. The CHRO is essential for managing the transition as shadow AI is brought under governance and for addressing employee concerns about AI adoption.

The Chief Technology Officer (CTO) or Chief Information Officer (CIO) provides the technical feasibility assessment and integration expertise. They understand the practical challenges of implementing sovereign AI infrastructure, the complexities of system integration, and the technical requirements for maintaining control over AI systems. Their role is crucial for translating governance requirements into technical specifications.

Additional key participants should include representatives from Risk Management to ensure comprehensive risk assessment across all dimensions, Internal Audit to provide independent verification of controls, Ethics or Compliance officers to address ethical considerations, and Business Unit Leaders from areas with high AI exposure or potential.

This cross-functional team should meet regularly, with clear escalation paths to the Board of Directors for critical decisions. The diversity of perspectives ensures that AI risks are identified and addressed from multiple angles, preventing blind spots that could lead to cognitive pollution or governance failures.

A 2024 Melbourne Business School study on enterprise AI governance for senior executives provides a comprehensive analysis of such cross-functional governance frameworks for Australian enterprises. The research emphasises that AI risks are extensions of existing governance challenges rather than entirely new phenomena, and that successful governance requires integration with existing risk management structures rather than creating parallel systems.

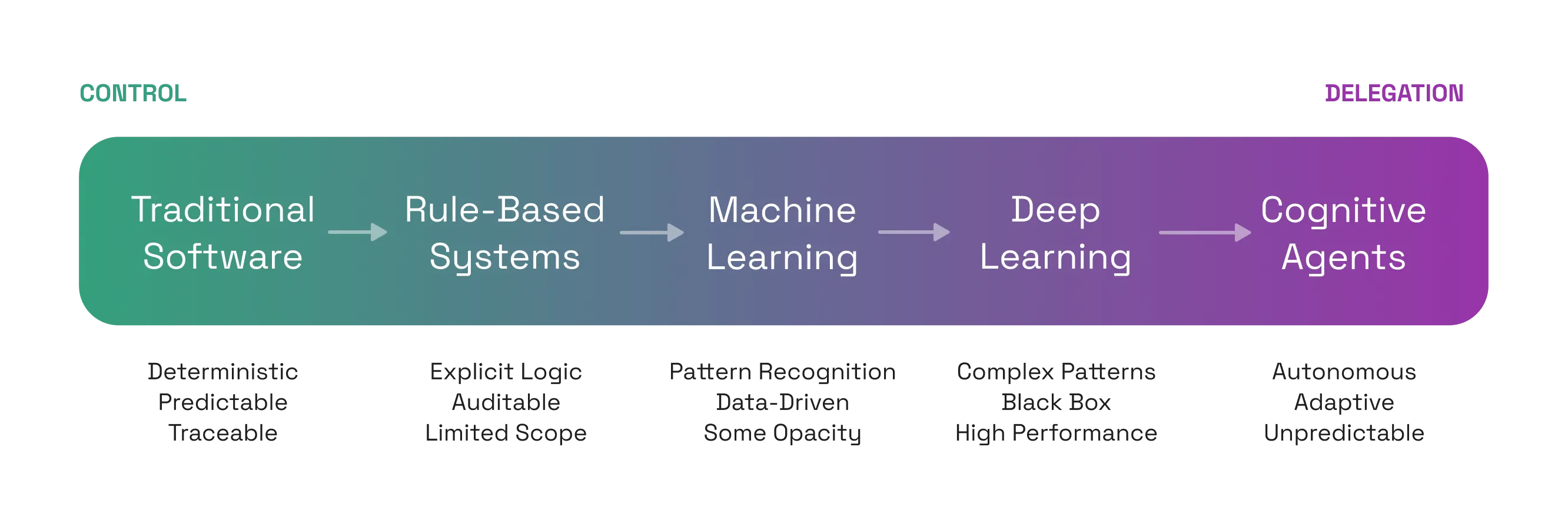

Implementation Approach

Rather than providing a detailed implementation roadmap in this whitepaper, we recognise that each organisation’s journey to sovereign AI will be unique, shaped by its specific industry context, risk profile, and digital maturity.

A comprehensive implementation framework and playbook is available as a companion resource to this whitepaper. This detailed guide provides step-by-step guidance for organisations at different stages of AI maturity, including assessment tools, governance templates, technical architectures, and change management strategies.

The framework addresses critical implementation considerations such as prioritising use cases based on risk and value, establishing governance structures before technology deployment, building internal capabilities while leveraging external expertise, and creating feedback loops for continuous improvement. It also provides specific guidance for Australian organisations navigating local regulatory requirements and market conditions.

For access to the implementation framework and additional resources, please contact the authors or visit our resource centre.

The Strategic Business Case

Market research from leading analyst firms indicates that AI leadership translates directly to financial outperformance and competitive advantage. The window for establishing this leadership position is rapidly closing, with market dynamics creating an exponentially widening gap between leaders and laggards.

According to McKinsey’s 2024 State of AI report and corroborating analysis from Accenture’s Technology Vision 2024, organisations leading in AI maturity - representing approximately 13% of enterprises - are capturing a disproportionate share of value. These organisations have deployed AI across multiple use cases and report returns on investment averaging over three times their initial investment, with time to value measured in months rather than years. Their rate of innovation significantly exceeds industry averages.

The majority of enterprises, roughly 60%, fall into a middle category with more modest results. These organisations typically limit their AI deployment to a handful of use cases and see positive but not transformative returns. Their implementation cycles are longer, and they struggle to scale beyond initial pilots.

The remaining organisations, approximately 27%, are experiencing difficulty in realising value from AI investments. They typically struggle with limited use cases and often see negative returns on their AI investments. Their extended implementation timelines and below-average innovation rates put them at an increasing competitive disadvantage.

Figure: The AI Leadership Dividend: Early Adoption Drives Exponential Returns (panels: ROI Distribution by AI Adoption Level; Compound Effect: AI Leadership Gap Widens Over Time).

Source: Early generative-AI adopters see positive ROI in 92% of cases, with an average 41% return per dollar spent (businesswire.com, snowflake.com). The adoption-leadership gap widens over ~5 years, with leaders accruing increasing advantage.

Mathematical modelling of AI adoption patterns suggests a compound effect where early leaders gain cumulative advantages that become increasingly difficult for followers to overcome. As leaders gain experience and refine their approaches, their advantage accelerates. Market analysis suggests that within three to five years, competitive positions in many industries will be largely determined by AI capabilities.
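A toy model makes the compounding argument concrete. The sketch below is not the modelling referred to above. It assumes, hypothetically, that each organisation's AI capability compounds at a constant annual rate from the same starting point (30% a year for a leader, 10% for a follower) and tracks the absolute gap year by year.

```python
from typing import List


def capability(annual_rate: float, years: int, start: float = 1.0) -> float:
    """Capability index after compounding `annual_rate` for `years` years."""
    return start * (1 + annual_rate) ** years


def leadership_gap(leader_rate: float, follower_rate: float, years: int) -> List[float]:
    """Absolute capability gap for each year 0..years, from an equal start."""
    return [
        capability(leader_rate, t) - capability(follower_rate, t)
        for t in range(years + 1)
    ]


# Hypothetical rates: the leader compounds at 30% a year, the follower at 10%.
gap = leadership_gap(0.30, 0.10, years=5)
# How much the gap grows from one year to the next.
yearly_widening = [later - earlier for earlier, later in zip(gap, gap[1:])]
print([round(g, 3) for g in gap])
print([round(w, 3) for w in yearly_widening])
```

Under these assumptions the gap grows by a larger amount every year (roughly 0.20, 0.28, 0.39, 0.53 and 0.71 index points over five years). Real adoption curves will not be this smooth. The point is only that a persistent difference in improvement rate produces a gap that widens rather than stabilises.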

Australian enterprises face unique opportunities in this landscape. Projections for AI’s contribution to Australian GDP show substantial growth potential through 2030, with sovereign AI markets experiencing particularly strong growth. A window of opportunity remains for organisations to establish leadership positions, supported by significant government investment in AI capabilities. Australia’s strong privacy frameworks create trust advantages, while geographic factors make self-reliance particularly valuable. The nation’s expertise in resource sovereignty provides a foundation for understanding AI sovereignty, while a skilled workforce offers implementation capability.

The total cost of ownership analysis reveals compelling economics. Initial implementation costs for sovereign AI platforms vary based on organisation size and complexity but typically range from hundreds of thousands to several million dollars in the first year, with ongoing operational costs substantially lower. Against this, the cost of inaction includes potential regulatory fines, brand damage from AI failures, accelerating competitive disadvantage, increased talent attrition in organisations seen as AI laggards, and the incalculable impact of cognitive pollution.

Financial analysis of sovereign AI implementations shows strong returns on investment over three-year periods, with payback periods typically under 18 months. These returns are validated by real implementations across banking, government, and retail sectors in Australia.
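Payback and ROI claims are easiest to scrutinise when the arithmetic is explicit. The sketch below computes two things: the month in which cumulative net benefit first covers the upfront cost, and a simple undiscounted ROI over the horizon. The cost, ramp-up period and monthly benefits are hypothetical figures chosen for illustration, not results from the implementations mentioned above.

```python
from typing import Optional, Sequence


def payback_month(upfront_cost: float, monthly_net_benefit: Sequence[float]) -> Optional[int]:
    """First month (1-based) in which cumulative net benefit covers the upfront cost.

    `monthly_net_benefit` should already have ongoing operating costs deducted.
    Returns None if the cost is not recovered within the horizon.
    """
    cumulative = -upfront_cost
    for month, benefit in enumerate(monthly_net_benefit, start=1):
        cumulative += benefit
        if cumulative >= 0:
            return month
    return None


def simple_roi(upfront_cost: float, monthly_net_benefit: Sequence[float]) -> float:
    """Undiscounted ROI over the horizon: (total net benefit - cost) / cost."""
    return (sum(monthly_net_benefit) - upfront_cost) / upfront_cost


# Hypothetical 36-month case: $1.2M upfront, a slow 6-month ramp, then steady benefit.
benefits = [30_000] * 6 + [100_000] * 30
print(payback_month(1_200_000, benefits))         # 17
print(round(simple_roi(1_200_000, benefits), 2))  # 1.65
```

With these inputs, payback falls in month 17 and three-year ROI is 1.65 (165%). The calculation is deliberately undiscounted. A board-level business case would normally also discount future benefits, for example with net present value, which lengthens the payback period.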

Governance and Operating Model

Effective AI governance is deeply rooted in established principles of corporate governance, adapted for the unique characteristics of AI systems. The Board of Directors holds ultimate accountability for AI functions, responsible for setting policies and overseeing their implementation.

Four essential principles guide AI governance. Delegation acknowledges that while the Board is ultimately accountable, decision-making authority for AI is delegated downwards to senior executives, managers, and in some cases, directly to the AI system itself. However, it must be explicitly clear that the AI system, as a machine, cannot be held responsible for its output; that responsibility firmly resides with the humans who built, deployed, and operated it.

Escalation recognises that in a rapidly evolving field like AI, Boards cannot anticipate every scenario. Robust escalation mechanisms are vital to ensure that information about critical situations - especially novel or fast-moving issues - is conveyed upwards to the appropriate level for response. This includes formal reporting, alerting, and potentially whistleblower processes that bypass traditional hierarchical structures.

Observability refers to the ability to understand the current state of a system. Modern AI systems generate vast amounts of data on their operations, inputs, outputs, usage, and defects. Effective governance requires specifying the right level of information - through suitable metrics, thresholds, and triggers - for all stakeholders within the enterprise to fulfil their obligations.
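Observability and escalation meet in the choice of metrics, thresholds, and triggers. The sketch below expresses them as data so that a breach maps directly to an escalation level. The metric names, threshold values, and three-level hierarchy are hypothetical. A real deployment would take them from the organisation's own risk appetite and reporting lines.

```python
from dataclasses import dataclass
from typing import List, Mapping, Optional, Tuple

# Escalation levels, ordered from least to most senior (hypothetical hierarchy).
LEVELS = ("system owner", "governance committee", "board")


@dataclass(frozen=True)
class Trigger:
    metric: str        # name of a metric the AI system reports
    threshold: float   # the trigger fires when the metric is at or above this value
    escalate_to: str   # one of LEVELS

    def __post_init__(self) -> None:
        if self.escalate_to not in LEVELS:
            raise ValueError(f"unknown escalation level: {self.escalate_to}")


def evaluate(
    metrics: Mapping[str, float], triggers: List[Trigger]
) -> Tuple[Optional[str], List[Trigger]]:
    """Return the most senior level to notify (or None) and the triggers that fired."""
    fired = [
        t for t in triggers
        # A missing metric counts as a breach: silence is escalated, not ignored.
        if t.metric not in metrics or metrics[t.metric] >= t.threshold
    ]
    if not fired:
        return None, []
    most_senior = max(fired, key=lambda t: LEVELS.index(t.escalate_to))
    return most_senior.escalate_to, fired


# Hypothetical metrics and thresholds; real values come from the organisation's risk appetite.
TRIGGERS = [
    Trigger("hallucination_rate", 0.05, "system owner"),
    Trigger("pii_leak_count", 1, "governance committee"),
    Trigger("customer_harm_incidents", 1, "board"),
]

level, fired = evaluate(
    {"hallucination_rate": 0.07, "pii_leak_count": 0, "customer_harm_incidents": 0},
    TRIGGERS,
)
print(level, [t.metric for t in fired])  # system owner ['hallucination_rate']
```

One design choice is worth highlighting. A metric that is not reported at all is treated as a breach, so a gap in observability is escalated rather than silently ignored.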

Controllability defines how system owners can exert influence and direct the operation of an AI system. Control can be imposed at various stages of the AI lifecycle, from high-level strategic decisions to lower-level technical parameters. For transparent AI, rules are inspectable and modifiable; for opaque black-box systems, different evaluation and assessment approaches are required.

To systematically assess AI governance, Boards and executives should continuously address eight key questions, two in each of four dimensions: strategic alignment (are we aligned to and informing enterprise strategy?), value creation (are we clear on the business case and realising expected value?), operational performance (are we meeting commitments and improving over time?), and risk management (do we have clarity on risk appetite and are we operating within tolerances?).
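Because the eight governance questions pair up under the four dimensions, they can be tracked as a simple checklist. The sketch below encodes the questions as paraphrased from the text above and lists any that are answered "no" or left unanswered. The yes/no answer format is a simplifying assumption; in practice each answer would be backed by evidence.

```python
from typing import Dict, List, Tuple

# The eight questions, paraphrased from the text, grouped by dimension.
GOVERNANCE_QUESTIONS: Dict[str, Tuple[str, str]] = {
    "strategic alignment": (
        "Are we aligned to enterprise strategy?",
        "Are we informing enterprise strategy?",
    ),
    "value creation": (
        "Are we clear on the business case?",
        "Are we realising expected value?",
    ),
    "operational performance": (
        "Are we meeting commitments?",
        "Are we improving over time?",
    ),
    "risk management": (
        "Do we have clarity on risk appetite?",
        "Are we operating within tolerances?",
    ),
}


def open_items(answers: Dict[str, bool]) -> List[Tuple[str, str]]:
    """(dimension, question) pairs answered 'no' or not answered at all."""
    return [
        (dimension, question)
        for dimension, questions in GOVERNANCE_QUESTIONS.items()
        for question in questions
        if not answers.get(question, False)
    ]


# Hypothetical review: everything affirmed except operating within tolerances.
answers = {q: True for qs in GOVERNANCE_QUESTIONS.values() for q in qs}
answers["Are we operating within tolerances?"] = False
for dimension, question in open_items(answers):
    print(f"{dimension}: {question}")
```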

The Australian Regulatory Context

Australia’s approach to AI regulation has been relatively “light touch” compared to international peers, with no specific AI Act. The practical effect of AI regulation primarily resides within existing legislation and case law pertaining to specific uses. The Privacy Act 1988 provides comprehensive data protection requirements, while anti-discrimination legislation across age, disability, race, and sex creates a complex compliance landscape. The Competition and Consumer Act 2010 addresses misleading conduct and consumer protection, while sector-specific regulations from APRA, ASIC, and other bodies add further layers.

In 2019, the Department of Industry, Science and Resources published eight voluntary AI ethics principles promoting human wellbeing, human-centred values, fairness, privacy protection, reliability, transparency, contestability, and accountability. While voluntary, these principles align with international frameworks and guide responsible AI adoption.

A crucial factor in Australia is the concept of a “Social License to Operate” for AI systems. Research indicates that Australia is among the nations most cautious about AI adoption, with less than half of Australians comfortable with its use at work and only a minority believing benefits outweigh risks. Building this social license requires transparent communication, demonstrable benefits, community engagement, ethical safeguards, and regular reporting.

Key Australian stakeholders include the National AI Centre housed within CSIRO’s Data61, Standards Australia developing interoperability standards, the Gradient Institute providing technical expertise with an ethical lens, the Productivity Commission advocating careful regulation, and ASIC expressing increasing concern about algorithmic consumer harm.

Mitigating Black Box Risks

To address the inherent opacity and manipulability of black-box AI models, organisations must adopt sophisticated strategies that go beyond simple explainability tools. The approach must be multi-layered, recognising that technical solutions alone cannot address all risks.

Avoiding over-reliance on single interpretation methods is crucial. Organisations should use multiple complementary tools to gain a holistic understanding, cross-validate findings across methods, look for consensus and contradictions, and document confidence levels in all interpretations. Interpretation tools should be considered a last resort when transparency is critical, with preference given to inherently interpretable models where possible.

Practical strategies for enhancing transparency include using enhanced visualisation techniques with confidence intervals, carefully assessing feature dependencies before applying interpretation methods, adopting hybrid approaches that leverage black-box models for feature engineering while using interpretable models for final predictions, and maintaining comprehensive documentation of all model decisions and interpretations.

Regular audits using multiple interpretation methods help cross-validate findings and identify potential manipulation. Investment in training for data scientists and decision-makers on the limitations of interpretation tools is essential, as is the development of clear guidelines for when to use black-box models versus more interpretable alternatives.

Perhaps most critically, organisations must address the human factors in AI interpretation. Research published in behavioural science journals demonstrates that when provided with AI explanations, users exhibit asymmetric learning - reinforcing beliefs confirmed by explanations while resisting abandoning contradicted beliefs. This leads to decreased decision performance and spillover effects to other domains.

Mitigation requires cognitive awareness training to educate users about confirmation bias, careful explanation design that presents multiple perspectives and highlights uncertainty, process controls requiring justification for decisions, and an organisational culture that rewards challenging AI outputs and promotes intellectual humility.
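A process control requiring justification can be as simple as refusing to record a decision unless it has a named human owner and a written reason. The sketch below shows one hypothetical form of such a control; the ten-word minimum and the field names are illustrative assumptions. A reason is demanded whether the reviewer accepts or overrides the AI recommendation, which targets the asymmetric learning described above.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List

MIN_JUSTIFICATION_WORDS = 10  # hypothetical policy threshold


@dataclass(frozen=True)
class Decision:
    case_id: str
    ai_recommendation: str
    final_decision: str
    decided_by: str      # the accountable human, never the AI system
    justification: str
    recorded_at: datetime


class DecisionLog:
    """Append-only record that rejects decisions lacking a named owner or a reason."""

    def __init__(self) -> None:
        self._entries: List[Decision] = []

    def record(self, case_id: str, ai_recommendation: str, final_decision: str,
               decided_by: str, justification: str) -> Decision:
        if not decided_by.strip():
            raise ValueError("a named human decision-maker is required")
        # Required whether the human accepts or overrides the AI, so agreeing
        # with the model is not the path of least resistance.
        if len(justification.split()) < MIN_JUSTIFICATION_WORDS:
            raise ValueError("justification is too short to be reviewed")
        entry = Decision(case_id, ai_recommendation, final_decision, decided_by,
                         justification, datetime.now(timezone.utc))
        self._entries.append(entry)
        return entry

    def agreement_rate(self) -> float:
        """Share of logged decisions that matched the AI recommendation."""
        if not self._entries:
            return 0.0
        agreed = sum(e.final_decision == e.ai_recommendation for e in self._entries)
        return agreed / len(self._entries)


log = DecisionLog()
log.record("case-001", "approve", "approve", "j.smith",
           "Income verified against payslips and credit history shows no defaults in five years.")
log.record("case-002", "approve", "decline", "a.nguyen",
           "Applicant disclosed a pending legal judgment that the model inputs do not capture at all.")
print(log.agreement_rate())  # 0.5
```

The agreement rate offers a rough monitoring signal. A rate that stays close to 100% over many cases may indicate that outputs are being accepted rather than challenged, which is worth investigating.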

Call to Action: Securing Your Sovereign Future

In a world increasingly shaped by artificial intelligence, the organisations that retain true strategic advantage will be those that maintain full sovereignty over their AI supply chains - from the sourcing of raw materials and hardware, through to the data infrastructure and models themselves. Sovereignty isn’t just a strategic ideal; it’s an operational necessity that extends from soil to silicon to model.

As geopolitical uncertainty and regulatory landscapes evolve, leaders must confront three critical questions. First, who truly controls your AI? If you cannot trace every component of your AI supply chain, from silicon chips to training data to model weights, you lack sovereignty. Every black box represents a vulnerability. Second, are your AI capabilities dependent on opaque, offshore platforms? If your AI runs on foreign infrastructure, uses non-transparent models, or relies on external APIs, your organisation’s cognitive capabilities remain at the mercy of entities beyond your control. Third, are they anchored securely on trusted, domestic foundations? True sovereignty means Australian data, Australian infrastructure, Australian governance, and Australian accountability. Anything less is a compromise.

The future belongs to enterprises prepared to invest in their sovereign capabilities, ensuring control, transparency, and resilience across their entire AI supply chain. Now is the moment to choose a path that safeguards not just your data and infrastructure - but your organisation’s cognitive integrity itself.

Your immediate next steps begin this week. Conduct a shadow AI audit across all departments by reviewing network traffic, checking expense reports, and documenting findings. Brief your Board with this whitepaper, highlighting competitive risks, outlining investment needs, and securing sponsorship. Assess current governance gaps by reviewing policies, identifying missing controls, mapping compliance requirements, and prioritising actions.
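Reviewing network traffic for shadow AI usually starts with web proxy or DNS logs. The sketch below counts requests to known AI services by department. Both the log format (whitespace-separated timestamp, department, user, host) and the domain list are assumptions for illustration. Real proxy exports differ, and the list would need to cover the services relevant to your organisation.

```python
from collections import Counter
from typing import Iterable

# Illustrative, not exhaustive: extend with the AI services relevant to you.
AI_SERVICE_DOMAINS = {
    "openai.com",
    "chatgpt.com",
    "anthropic.com",
    "claude.ai",
    "gemini.google.com",
}


def is_ai_host(host: str) -> bool:
    """True if `host` is a listed domain or a subdomain of one."""
    host = host.lower().rstrip(".")
    return any(host == d or host.endswith("." + d) for d in AI_SERVICE_DOMAINS)


def shadow_ai_usage(log_lines: Iterable[str]) -> Counter:
    """Count requests to AI services per (department, host).

    Assumes a hypothetical whitespace-separated proxy log format:
        <timestamp> <department> <user> <host>
    """
    usage: Counter = Counter()
    for line in log_lines:
        fields = line.split()
        if len(fields) < 4:
            continue  # skip blank or malformed lines
        _, department, _, host = fields[:4]
        if is_ai_host(host):
            usage[(department, host.lower().rstrip("."))] += 1
    return usage


sample_log = [
    "2025-08-01T09:00:00Z finance alice api.openai.com",
    "2025-08-01T09:05:00Z finance bob api.openai.com",
    "2025-08-01T09:10:00Z marketing carol claude.ai",
    "2025-08-01T09:15:00Z marketing carol intranet.example.com",
    "2025-08-01T09:20:00Z legal dave notopenai.example",
]
for (department, host), count in shadow_ai_usage(sample_log).most_common():
    print(department, host, count)
```

Matching on the whole domain suffix catches subdomains such as api.openai.com without falsely matching unrelated hosts that merely end in the same letters. Expense reports may surface paid subscriptions that this kind of traffic analysis misses.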

Over the next 30 days, establish an AI governance committee with executive sponsorship, form a cross-functional team as outlined in this paper, define charter and scope, and set meeting rhythms. Define sovereignty requirements including data residency needs, compliance obligations, performance requirements, and security specifications. Evaluate platform options through vendor briefings, technical assessments, customer references, and business case development.

The time for decisive action has arrived. The choice is binary and urgent: embrace sovereign AI today, or surrender your organisation’s cognitive future to foreign algorithms tomorrow.

Take action now. Your cognitive sovereignty depends on it.

Appendices

Appendix A: Glossary of Terms

• Cognitive Pollution: The systemic contamination of organisational judgment through ungoverned AI systems

• Shadow AI: Unauthorised use of AI tools within an organisation, creating unmanaged cognitive delegation

• Sovereign AI: Complete control over AI infrastructure, data, and operations within jurisdictional boundaries

• RAG (Retrieval-Augmented Generation): AI technique combining language models with information retrieval systems

• Black Box AI: AI systems whose decision-making processes are opaque and uninterpretable

• Epistemic Crisis: Fundamental breakdown in how an organisation determines truth and makes decisions

• Strategic Drift: Deviation from organisational truth through reliance on ungoverned AI outputs

• Cognitive Diversity: Diversity of thought and reasoning approaches within human cognition

• Social License to Operate: Public acceptance and trust in an organisation’s use of AI systems

Appendix B: Compliance Checklist

Australian organisations must navigate a complex regulatory landscape including the Privacy Act 1988 with its Australian Privacy Principles, anti-discrimination legislation across age, disability, race and sex, the Competition and Consumer Act 2010, sector-specific regulations from APRA, ASIC, ACMA and others, state and territory privacy and surveillance laws, and international compliance requirements where applicable. A comprehensive compliance program addresses each of these areas systematically while maintaining flexibility for evolving regulations.

Appendix C: Further Reading

Essential research includes “Advancing cybersecurity: a comprehensive review of AI-driven detection techniques” from the Journal of Big Data (2024), “Beyond black box AI: Pitfalls in machine learning interpretability” (2024), “Global AI and Data Sovereignty Findings” from EnterpriseDB (2023), and “The Pollution of AI” from Communications of the ACM (2024). Industry reports from Melbourne Business School on enterprise AI governance, OpenAI’s threat intelligence reports, and Australian government resources on AI ethics and responsible AI provide practical implementation guidance. International frameworks from the OECD, UNESCO, IEEE, and ISO offer broader context for sovereign AI development.


© 2025 Maincode Pty Ltd. All rights reserved. This whitepaper contains proprietary and confidential information.